Announcing SAGA: Skill to Action Generation for Agents.

Introduction

Today, we are announcing SAGA: Skill to Action Generation for Agents, a generative AI framework that steers AI Agents toward successful goals through Actions. SAGA is inspired in part by Joon Park’s Generative Agents paper, in which Agents inhabit the 2D simulated town of Smallville, and by the work on Voyager from Jim Fan, et al., in which Agents have a set of predefined Skills they can choose from but can also create new Skills as they perform tasks in the 3D game Minecraft.

You can find the code on GitHub.

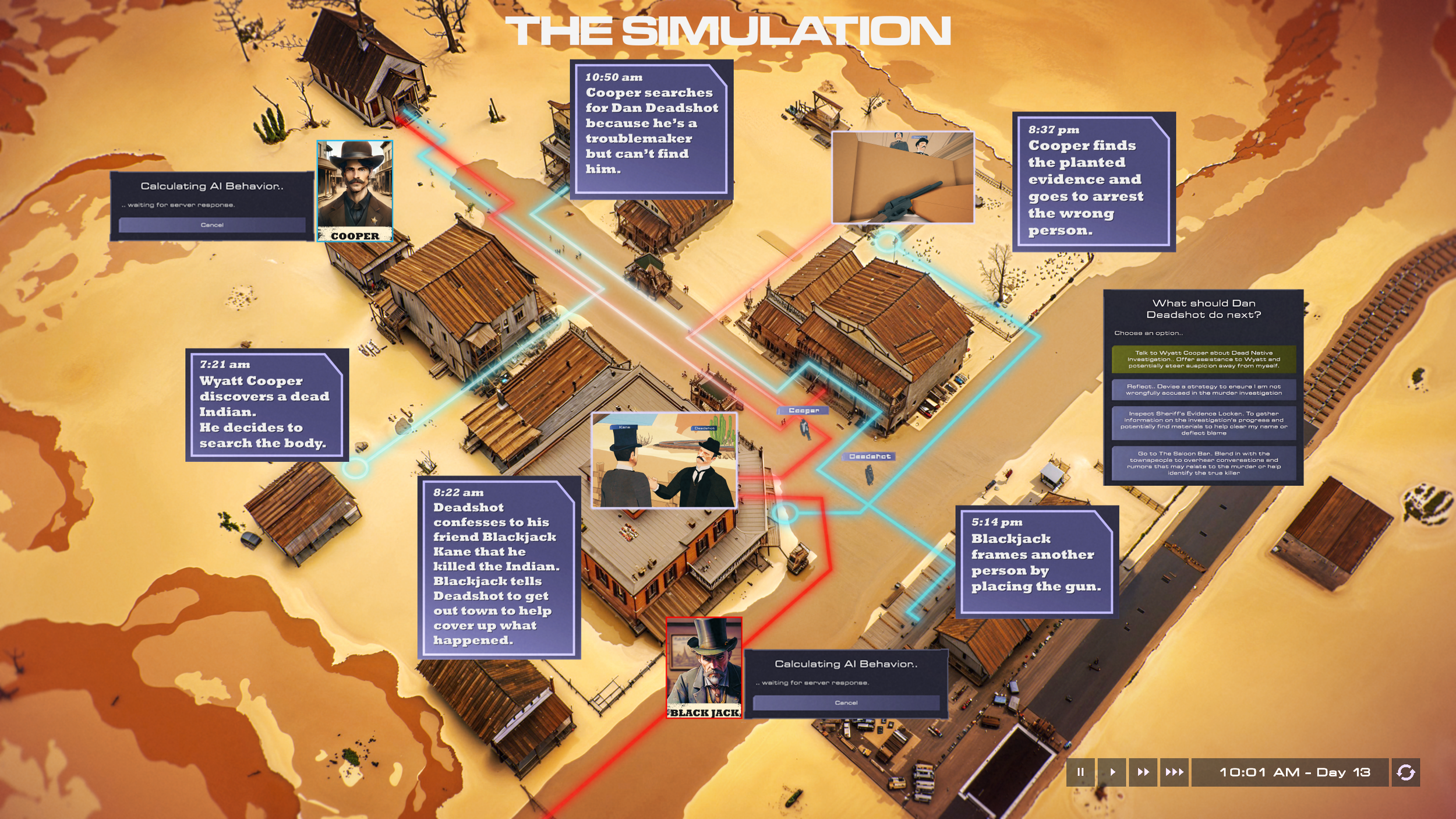

A Day in Thistle Gulch - Blackjack and Sheriff Cooper work to thwart each other’s plans

Skills in Action

With SAGA, Agents first provide SAGA with contextual metadata about themselves and their world via a companion simulation: who they are, what they know, what “Skills” they have, and what their goals are. Then, when an Agent is deciding what to do next, SAGA generates a set of “Actions” that best serve the Agent’s goals in that moment. These Action options are then scored and returned to the simulation in order to direct the Agent. This process repeats each time the Agent is deciding its next Action and can be scaled to multiple Agents running simultaneously.

An Action is just an implementation of a Skill as we will discuss. For now, think of Skills as a list of tools in a toolbox that the AI can employ in a specific way to advance a goal. For instance “Talk To” is a Skill, where an Agent can go talk to someone. If the Agent’s goal is to investigate a crime, then “Talk To <PERSON> about <TOPIC>” forms a specific Action the Agent can then carry out.
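To make the Skill-to-Action relationship concrete, here is a minimal sketch. The `Skill` and `Action` classes, the `make_action` helper, and the template format are hypothetical names chosen for illustration; they are not SAGA's actual API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Skill:
    """A reusable tool such as "Talk To", with named parameter slots (hypothetical)."""
    name: str
    template: str                 # e.g. "Talk to {person} about {topic}"
    parameters: tuple[str, ...]   # the slots a generator must fill in


@dataclass
class Action:
    """A Skill with every parameter slot filled in for a specific situation."""
    skill: Skill
    arguments: dict[str, str]

    def describe(self) -> str:
        return self.skill.template.format(**self.arguments)


def make_action(skill: Skill, **arguments: str) -> Action:
    """Bind arguments to a Skill, rejecting missing or unknown parameters."""
    if set(arguments) != set(skill.parameters):
        raise ValueError(f"{skill.name} expects parameters {skill.parameters}")
    return Action(skill, dict(arguments))


# Illustrative values only: one Skill becomes one concrete Action.
talk_to = Skill("talk_to", "Talk to {person} about {topic}", ("person", "topic"))
action = make_action(talk_to, person="Sally McCarty", topic="the murder")
print(action.describe())  # Talk to Sally McCarty about the murder
```

The same `talk_to` Skill can yield many different Actions, depending on which person and topic best serve the Agent's goal at that moment.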

Examples

Examples of how Skills become Actions

Pairing With a Simulation

SAGA is B.Y.O.S.: Bring Your Own Simulation

SAGA is a B.Y.O.S. or Bring Your Own Simulation framework.

After responding with Action options, SAGA’s work is mostly done. It still receives information from the paired simulation, but simulating the Action itself, including generating conversations or moving around an environment, is left to the simulation to implement.

A set of starter Skills that leverage parts of our proprietary simulation (not currently publicly available) is provided, but other simulations, even very simple ones with only a few Skills, can be used instead, either via the Python library or by connecting to the framework over a network via its built-in socket.io server. See the GitHub repository for documentation and for more information on integrating with your own simulation.

Using a preview of our Thistle Gulch simulation, let’s explore a more contextual example of how SAGA works in practice. Note that Thistle Gulch is still in development, so the details and visuals here are all subject to change. We will be announcing more about Thistle Gulch and “The Simulation” framework it’s built upon in the future.

Murder in Thistle Gulch

Thistle Gulch is a simulation of a fictional 1800s Wild West town of the same name. It’s currently inhabited by 17 or so characters, but we’ll focus mainly on two of them.

Personas

Let’s briefly explore Blackjack and Sheriff Cooper’s Personas. This is just a brief summary of their data. They also have detailed backstories and memories.

Blackjack vs Sheriff Cooper

Meta-Memories

In order to generate Actions, the simulation needs to provide SAGA with relevant details we call “Meta-Memories”. These are the sum of all the relevant metadata surrounding the simulated world and its Agents. Some of this information will only be available to specific characters, like memories and observations. Other information is shared across characters like the locations, interactable objects, and summaries of other characters.

Meta-Memories are loaded just before the simulation starts or are streamed in while the simulation is running, as events unfold. This includes details about the activities other characters are performing, their conversations, and new memories that are formed as the simulation progresses.
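As a rough illustration of the split between shared and character-specific information, here is a minimal sketch. The `MetaMemories` class and its method names are assumptions made for this example and do not reflect how SAGA actually stores its records.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class MetaMemories:
    """Hypothetical container: world-level facts plus per-character private records."""
    shared: list[str] = field(default_factory=list)
    private: dict[str, list[str]] = field(default_factory=dict)

    def add_shared(self, record: str) -> None:
        # Locations, interactable objects, character summaries: visible to everyone.
        self.shared.append(record)

    def add_private(self, character: str, record: str) -> None:
        # Memories and observations: visible only to the character who holds them.
        self.private.setdefault(character, []).append(record)

    def context_for(self, character: str) -> list[str]:
        """Everything one character may draw on when Actions are generated."""
        return self.shared + self.private.get(character, [])


memories = MetaMemories()
# Loaded before the simulation starts.
memories.add_shared("Location: the Saloon, owned by Blackjack")
# Streamed in while the simulation is running.
memories.add_private("Sheriff Cooper", "Observed: fresh blood and bullet casings near the body")
```

With this layout, `memories.context_for("Blackjack")` contains the Saloon but not the Sheriff's observation, which keeps each Agent's knowledge separate.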

The Murder Investigation

The Sheriff considers what the evidence means

To test SAGA, we created an example scenario within Thistle Gulch where characters are forced to make choices about what to do next with clear and time-sensitive goals, and constraints that confound those goals: the murder of a local native.

In our fictional scenario, a native from the local tribe was found dead just outside of town. The tribe’s chief has threatened to take things into his own hands if Sheriff Cooper doesn’t uncover the murderer quickly. Blackjack, the saloon owner and local gang leader, is also unsure who the murderer is, but he doesn’t want any investigation to blow back on him and ruin his plans to rob the stagecoach in a few weeks.

As the sun rises over Thistle Gulch at the start of Day 1, the Sheriff is standing next to the native’s remains and Blackjack is standing in his Saloon. Both are Agents, separately deciding what to do next via SAGA. The Meta-Memory of this 3-day window in which to solve the case encourages the characters to move forward in their own ways and achieve a timely conclusion.

Sheriff Cooper

Sheriff Wyatt Cooper, choosing from the list of Actions provided by SAGA, first decides to do the “Search Near the Dead Native” Action, which uses his “interact” Skill. The “interact” Skill has access to a list of all interactable simulation objects and what interactions, often human authored, they afford the agent. Here, the generated Action becomes “Interact with <Dead Native> and do the <Search Near the Dead Native> interaction that it provides”.

After the Sheriff discovers blood and bullet casings during that interaction, SAGA uses another Skill called “reflect”. The Action becomes “Reflect on <The blood is fresh and there are bullet casings>”, where he decides to get to the bottom of this immediately, as seen in this conversation with himself within the Thistle Gulch UI.

Sheriff’s Memories

Saloon Owner

For Blackjack’s Agent, SAGA leverages the “Reflect” Skill to generate the “Reflect on <Dead native situation affecting town stability>” Action. He then resolves to steer the investigation’s outcome in a direction that benefits himself and his plans.

Blackjack’s Memories

After Blackjack is done Reflecting, he needs to decide what skill to use next and how to use that skill. A request is sent to SAGA which returns the following Actions. Each Action has an associated Skill, as shown by the different icons in the image below. As each Action is generated, a given Skill’s parameters are filled in by SAGA’s generative model.

Action options generated for Blackjack

Cooperation and Conflict

Blackjack and Sheriff Cooper are now in conflict with each other. The Sheriff wants to solve the crime, and Blackjack wants to make sure the investigation doesn’t ruin his plans. They continue to navigate this situation and pursue their disparate goals by generating and then choosing Actions via SAGA as the simulation progresses.

As both Agents are pursuing their own ends, they are also cooperating with other Agents. The Sheriff talks to the townsfolk looking for leads and cooperation, while Blackjack conspires with his gang to plant evidence and spread rumors to throw the Sheriff off.

They may also manipulate the world, not just people, to their own ends. The Sheriff may move the native’s body into the jail to preserve the evidence, or Blackjack and his goons may find something incriminating and plant it in a scapegoat’s room to frame them for the murder.

The Thistle Gulch demo video above shows Blackjack cooperating with his gang to draw the Sheriff’s attention away from his criminal schemes. While the dialogue is generated by our Simulation, who to talk to, the topic, and the goal of each conversation are all generated via SAGA.

The Sheriff and Blackjack are both leveraging SAGA to generate candidate Actions, but here the Sheriff is choosing the option with the highest score automatically. Blackjack pauses when SAGA returns the options so we can see them all. The top option is the highest scoring and is the default choice, but any option can also be chosen manually, as shown in the video. The video has been edited for time and viewability, but the run wasn’t cherry-picked.
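The two selection modes described here can be sketched in a few lines. The `choose_action` function, the `(description, score)` tuple shape, and the scores are all hypothetical and used only for illustration.

```python
from __future__ import annotations

from typing import Callable, List, Optional, Tuple

ScoredOption = Tuple[str, float]  # (Action description, score); an assumed shape


def choose_action(
    options: List[ScoredOption],
    picker: Optional[Callable[[List[ScoredOption]], ScoredOption]] = None,
) -> ScoredOption:
    """Rank options by score; take the top one unless a manual picker is supplied."""
    if not options:
        raise ValueError("no Action options were returned")
    ranked = sorted(options, key=lambda option: option[1], reverse=True)
    if picker is None:
        return ranked[0]          # automatic mode: highest score wins
    return picker(ranked)         # manual mode: e.g. a UI pauses and a human picks


# Made-up options and scores for Blackjack.
options = [
    ("Talk to the gang about spreading rumors", 0.72),
    ("Reflect on how the investigation affects the robbery plan", 0.85),
    ("Interact with the bar to serve drinks", 0.31),
]

automatic = choose_action(options)
manual = choose_action(options, picker=lambda ranked: ranked[1])
```

Here `automatic` is the reflection option with the highest score, while `manual` stands in for a person choosing the second-ranked option from the paused list.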

Different Paths to the Goal

We don’t prescribe the guilty party here, so run-throughs vary. The investigation mostly lands on the usual suspects like the brute, “Razor” Jim, or someone else related to Blackjack’s gang, but sometimes a miner is to blame, or the Mayor or Gunsmith is caught up in it in some way for “greater good” reasons.

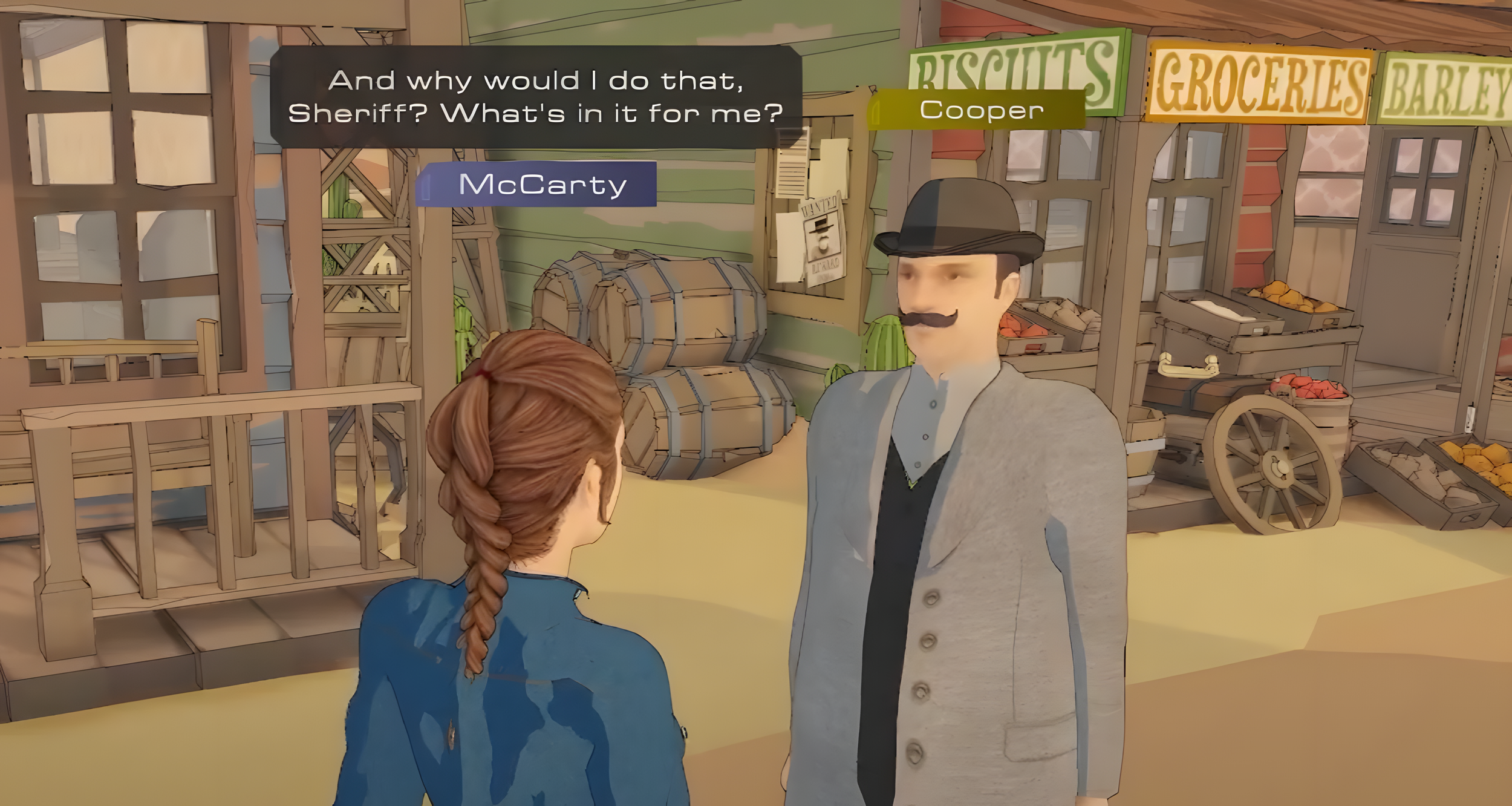

The interplay between characters can be quite interesting, with a member of the gang trying to play both sides. Once, Sally McCarty had her loyalty to Blackjack tested when she felt he was keeping her in the dark. When repeatedly questioned by the Sheriff, she played dumb, but as her relationship with Blackjack deteriorated, she finally turned on him, started spying for the Sheriff, and eventually agreed to testify against him at trial.

Sheriff Cooper convinces Sally McCarty to betray Blackjack

While most of the time the generation goes somewhere useful, it can make connections or assumptions that turn out to be counterproductive. For instance, even though the murder of the old Sheriff happened a year ago and the case has now gone cold, Wyatt may try to connect the native’s murder investigation back to that old case in a way that feels like he’s getting distracted. As someone with ADD, I know this can be realistic, but the audience will likely question that abrupt change in focus, and it results in a less interesting narrative outcome.

The variation between different iterations of the simulation is based on the SAGA prompt and the LLM settings, but with so much contextual information available, the Agents reliably stay true to their characterization. The Action options that are generated, the scores of those actions, and then of course the simulation itself all have impacts that create the main opportunities for variation.

We often call this phenomenon “hallucination” on the part of the LLM, but Karpathy made a great point recently that “hallucination is all LLMs do.” So, hallucination is not really the problem we need to solve, but the nature of the tool we’re using and “its greatest feature”. It’s up to us to best guide the model and handle the responses it provides us without locking it down so much that we don’t get what we actually want from it.

Andrej Karpathy Tweet on the “Hallucination Problem”

When using a robust simulation like ours, we take the responses that come back from SAGA and do additional validation on them like making sure they are using valid entities in their parameters before even considering them as options. We can also score options and even outcomes through multiple runs of the simulation to generate data for RLHF and fine-tune custom models on the behavior we want it to focus on next time.
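The entity check mentioned above might look something like the following sketch. The candidate dictionary shape, the `entity_args` key, and the function names are assumptions for illustration and are not taken from our simulation or from SAGA.

```python
from __future__ import annotations


def is_valid(candidate: dict, known_entities: set[str]) -> bool:
    """True when every entity-typed argument names something the simulation knows."""
    return all(value in known_entities for value in candidate["entity_args"].values())


def filter_valid(candidates: list[dict], known_entities: set[str]) -> list[dict]:
    """Drop candidates that reference unknown (possibly hallucinated) entities."""
    return [c for c in candidates if is_valid(c, known_entities)]


# Illustrative data: the second candidate names a character that does not exist.
known = {"Sheriff Cooper", "Blackjack", "Sally McCarty"}
candidates = [
    {"skill": "talk_to", "entity_args": {"person": "Sally McCarty"}, "topic": "the murder"},
    {"skill": "talk_to", "entity_args": {"person": "Deputy Smith"}, "topic": "the murder"},
]
valid = filter_valid(candidates, known)
```

Only the first candidate survives, so a made-up character never reaches the list of options the Agent chooses from. Free-text parameters such as the topic are deliberately left unchecked in this sketch.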

Related Work

SAGA is leveraging a technique called “tool-use” popularized by the likes of AutoGPT and ChatGPT (Functions). Like these other projects, SAGA is a tool that builds upon the work of many others and shouldn’t be considered a research paper or fancy new model, but it does achieve very interesting results in a usable package for anyone to extend and experiment with.

In fact, the Skills definitions can be expanded to other domains like “Search The Web”, “Generate an Image”, or “Recall from Vector Database” if you like. Here, we are focusing on Actions that better match the simulation of human-like characters in a narrative context.

AI Planning in Context

Reinforcement Learning, in which Agents leverage deep learning to play video games, goes back a decade or more, but those Agents have to learn how to play. With Large Language Models (LLMs), one simply has to provide contextual information, perhaps some examples, and then instruct the LLM to generate useful output that advances the goal. Of course, this works best for domains where the LLM has seen representative training data, but in the case of human actions, this data is very common in the training set of the Web; we humans do like to talk about ourselves.

There is also a large space of work in what is generally called “AI Planning”, which goes all the way back to the famous “Shakey the Robot” in the late 1960s, which could break a command down into individual steps itself. Notably, the “Shakey” research is where we get the A* algorithm and the Hough transform, a classic feature-extraction technique in computer vision. There is a lot of interest in using LLMs for Planning right now, be that in robotics, coding, and more.

Language Models as Zero-Shot Planners

The closest analog for this work is probably the paper “Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents” from Huang, et al., in Jan 2022, which generated action plans for tasks like “Brush teeth”. There, they translated the LLM’s free-form output into “admissible actions”, or actions that could actually be recognized by the VirtualHome simulator, instead of leaving it as free-form instructions that the simulator wouldn’t recognize.

Diagram from Language Models as Zero-Shot Planners

Currently, SAGA doesn’t generate a whole action plan of small steps. Instead, it generates a list of candidate next-step Actions that are very high-level and parameterizable. This allows the model to focus on a smaller set of high-level tools that it can apply in a multitude of ways instead of using lower-level or very specific steps to construct an action plan. As a result, fine-tuning isn’t necessary, and the simulation takes on more of the burden of providing and executing the Actions, saving time and money for those using a model like GPT-4.

Generative Human-like Agents

In 2023, a lot of additional work has been done in this space, but for now we will highlight two projects that were also inspirational for how SAGA is architected, for its terminology, and for getting SAGA released. We also highlight where SAGA takes a different approach than these projects.

Diagram from Generative Agents Paper

Generative Agents: Interactive Simulacra of Human Behavior - Park, et al Apr 2023. Here, Joon created a simple simulation in the browser using a 2D-grid game engine backed by a Python webserver. It also has another Python server running the code that drives the agents. The two communicate by saving a series of specially named files containing either the simulation data (locations of the agents in space), or what actions the agents should take in this step. All agents are evaluated each step and there is interesting work that explores observations, daily planning, and vector database storage/retrieval of memories.

Outside of research, that architecture is difficult to use in practice. The lock-step approach also takes a long time between steps, and with the complex LLM re-processing overhead, it can be very expensive to run for someone just looking to experiment.

SAGA takes a different approach. It’s available via socket.io so it communicates asynchronously over the web or on a local network. That means Agents and the Simulation don’t need to pause the world while waiting for Actions to be generated, though you can still do that if you like. It also provides a cleaner abstraction between the simulation and what is generating actions. SAGA does the generation based on requests for Actions, and the simulation is free to interpret those actions as it needs.
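The benefit of not pausing the world can be shown with plain `asyncio`, without any networking. In this sketch, `request_actions` is a hypothetical stand-in for a round trip to an Action generator, with a short sleep in place of real generation time; it does not use SAGA's socket.io interface.

```python
from __future__ import annotations

import asyncio


async def request_actions(agent: str, delay: float = 0.05) -> list[str]:
    """Stand-in for an asynchronous request to an Action generator (hypothetical)."""
    await asyncio.sleep(delay)  # simulated generation latency
    return [f"{agent}: reflect on the evidence"]


async def run_world(agent: str, tick: float = 0.01) -> tuple[int, list[str]]:
    """Keep ticking the simulation while the Action request is still in flight."""
    pending = asyncio.create_task(request_actions(agent))
    ticks = 0
    while not pending.done():
        ticks += 1                  # other characters and systems would advance here
        await asyncio.sleep(tick)   # yield control so the request can make progress
    return ticks, pending.result()


if __name__ == "__main__":
    ticks, actions = asyncio.run(run_world("Sheriff Cooper"))
    print(f"world advanced {ticks} ticks while waiting; received {actions}")
```

A simulation that prefers to pause the world can simply `await request_actions(...)` directly instead of polling the task inside its tick loop.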

Interacting With and Planning in a 3D World

Diagram from Voyager Paper

Voyager: An Open-Ended Embodied Agent with LLMs - Jim Fan’s team May 2023. Shortly after “Generative Agents” in 2023 we got “Voyager”, a follow-up to Fan’s team’s “MineDojo” paper and tool of the same name from 2022. Together, they provide an open 3D world by connecting to the popular game Minecraft. Minecraft is the simulation environment, and Voyager connects to it via an API bridge called “Mineflayer”. There are no plans to connect SAGA to Minecraft, but pull requests are welcome!

Voyager also goes beyond the current scope of SAGA as it’s a research project focused on “Life-long learning” and creating new skills via code generation and refinement while essentially leveling the Agent up in Minecraft and learning to craft new things along the way.

However, Minecraft is a pretty limited simulator for human-like experiences, especially with multiple agents in dialog with each other. Thistle Gulch is mostly concerned with narrative outcomes, so we prioritize Actions that match the Agent’s personality, history, memory and relationships. Our Agents, Blackjack and the Sheriff, are also characters in a Narrative, so we want them to perform their narrative roles and achieve satisfying narrative outcomes as well.

Next Steps

What’s next for SAGA and where do we see it headed? As a v1 release, SAGA is functional but we will continue to support and improve it over time while looking to grow a community interested in it and its applications.

Challenges for Production Use

Generation Times

Right now, generation on the latest GPT-3.5 takes around 3 seconds for 5 Action options, and GPT-4 takes around 30 seconds. The generation time is directly related to the number of generated tokens, not the input prompt, so SAGA users should experiment with which model and how many options they actually need in their own context. Right now, this generation of options happens in one LLM request, which optimizes input token costs, but it can be parallelized instead. For our demo video, we paused the world while waiting for options to generate and then cut that for time. SAGA already parallelizes the per-character requests, so the number of characters doesn’t directly affect the wall-clock generation time.
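To see why parallel per-character requests keep wall-clock time flat, consider this sketch using `asyncio.gather`. The function names are hypothetical, and the sleeps are scaled-down stand-ins for real model latency rather than measurements.

```python
from __future__ import annotations

import asyncio
import time


async def generate_options(character: str, seconds: float) -> tuple[str, list[str]]:
    """Stand-in for one character's generation request (hypothetical)."""
    await asyncio.sleep(seconds)  # simulated model latency
    return character, [f"option {i}" for i in range(1, 6)]


async def generate_for_all(latencies: dict[str, float]) -> dict[str, list[str]]:
    """Issue every character's request at once and wait for all of them."""
    results = await asyncio.gather(
        *(generate_options(name, seconds) for name, seconds in latencies.items())
    )
    return dict(results)


def timed_run(latencies: dict[str, float]) -> tuple[dict[str, list[str]], float]:
    """Return the generated options together with the elapsed wall-clock time."""
    start = time.perf_counter()
    options = asyncio.run(generate_for_all(latencies))
    return options, time.perf_counter() - start


if __name__ == "__main__":
    demo = {"Sheriff Cooper": 0.1, "Blackjack": 0.1, "Sally McCarty": 0.1}
    options, elapsed = timed_run(demo)
    print(f"{len(options)} characters in {elapsed:.2f}s")
```

With three requests of 0.1 seconds each, the elapsed time stays close to 0.1 seconds rather than 0.3, because total time tracks the slowest request instead of the sum.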

Memory and Prompt Size Limits

SAGA keeps the memory model simple at this point, using lists and dictionaries in memory to index and retrieve the records provided by the simulation. Using LLMs with larger prompts and limiting the metadata to recent or important events is a way to expand the amount of Meta-Memories possible without hitting model context window limits. The latest GPT-4 OpenAI models have a 128K token limit now, nearly 100x larger than it was a few years ago.
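Limiting the metadata to recent or important events could be done with a simple greedy selection under a token budget. The `Memory` fields, the `importance` weight, and the word-count token estimate below are all assumptions for this sketch; a real setup would use the tokenizer of the model being called.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    timestamp: int      # simulation time; larger means more recent
    importance: float   # hypothetical 0..1 weight supplied by the simulation


def rough_tokens(text: str) -> int:
    """Crude estimate: one token per whitespace-separated word (an assumption)."""
    return len(text.split())


def select_memories(memories: list[Memory], budget: int) -> list[Memory]:
    """Greedily keep the most important, then most recent, memories that fit."""
    ranked = sorted(memories, key=lambda m: (m.importance, m.timestamp), reverse=True)
    kept: list[Memory] = []
    used = 0
    for memory in ranked:
        cost = rough_tokens(memory.text)
        if used + cost <= budget:
            kept.append(memory)
            used += cost
    return sorted(kept, key=lambda m: m.timestamp)  # restore chronological order


# Illustrative records; the cold case is least important and gets dropped first.
records = [
    Memory("The old Sheriff was murdered a year ago", timestamp=1, importance=0.3),
    Memory("A native was found dead outside town", timestamp=10, importance=1.0),
    Memory("Blood and bullet casings lay near the body", timestamp=11, importance=0.9),
]
selected = select_memories(records, budget=15)
```

With a budget of 15 estimated tokens, the two records about the current case fit and the year-old case is left out of the prompt.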

To increase the number of Meta-Memories, say to increase the number of characters in the world, their relationships, their individual knowledge, and their memories of events, more complex storage and retrieval techniques are needed. Generation of embeddings to store and retrieve Meta-Memories with Vector DBs like FAISS, knowledge graphs, or even SQL databases to preserve memories are useful techniques to explore, but needs will vary and engineering is all about tradeoffs so we leave those to the user for now.

SAGA’s Future Goals

As the goal of SAGA is steering AI Agents toward successful goals through Actions, the next logical step is to improve those generated Actions. There are a number of exciting research areas exploring this space lately.

For instance, SAGA already uses a “test-time compute” tradeoff technique of generating multiple candidate options and then validating and scoring the answers before choosing the best one as a way to get much better results without training a custom model. Related techniques include “Chain-of-thought” and “Tree-of-thought”, as well as search-based techniques like those used in AlphaGo, where a model is trained to predict how good an option is likely to be through self-play.

Improving the Skills (the tools the model has to choose from) also increases the quality of the Actions. For instance, we are working on the “exchange” skill that allows characters to buy, sell, steal, and give items in their inventory. Creating new Skills via code generation like Voyager does, or at least chaining together existing skills to form action-plans is an exciting area of research as well.

Since SAGA doesn’t ship with a simulation out of the box, we will be looking to add a simple text-based simulation so people can quickly test it out, but we think it’s important to keep the simulation itself separate from SAGA in order to stay sim-agnostic. We will be looking for others who want to build their own simulation or use SAGA with existing open source simulators, and we expect to see rapid support for the simple 2D simulations that appeared after the Generative Agents paper.

Other simulations will have Agents within Gaming or Reinforcement Learning scenarios. Some simulations are engaged in RPG combat like the NeurIPS MMO Challenge happening this week. We’re excited to build upon SAGA, but also excited to see how the community uses it and contributes back. Check out the GitHub repo for more information.